
LLM Chat

This project is currently a CLI for OpenAI's GPT model API. The long-term goal is to make it much more general, so that it can interface with any LLM, whether that is a cloud service or a model running locally.

Usage

Installation

The package is not currently published, so you will need to build it yourself. This requires Poetry v1.5 or later. Once Poetry is installed, run the following commands:

```shell
git clone https://code.harrison.sh/paul/llm-chat.git
cd llm-chat
poetry build
```

This will create a wheel file in the dist directory. You can then install it with pip:

```shell
pip install dist/llm_chat-0.6.1-py3-none-any.whl
```

Note: a much better way to install the package system-wide is with pipx, for example pipx install dist/llm_chat-0.6.1-py3-none-any.whl. This installs the package in an isolated environment and makes the llm command available on your PATH.

Configuration

Your OpenAI API key must be set in the OPENAI_API_KEY environment variable. The following additional environment variables are supported:

| Variable | Description |
|---|---|
| OPENAI_MODEL | The model to use. Defaults to gpt-3.5-turbo. |
| OPENAI_TEMPERATURE | The temperature to use when generating text. Defaults to 0.7. |
| OPENAI_HISTORY_DIR | The directory to store chat history in. Defaults to ~/.llm_chat. |

These settings can also be configured via command-line arguments. Run llm chat --help for more information.
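As an illustration of how the environment variables and defaults in the table fit together, here is a minimal Python sketch. Settings and load_settings are hypothetical names, not llm_chat's actual API, and command-line overrides are left out for brevity.

```python
import os
from dataclasses import dataclass
from pathlib import Path
from typing import Mapping, Optional

# Defaults as documented in the configuration table.
DEFAULT_MODEL = "gpt-3.5-turbo"
DEFAULT_TEMPERATURE = 0.7
DEFAULT_HISTORY_DIR = "~/.llm_chat"


@dataclass(frozen=True)
class Settings:
    """Hypothetical settings container; not llm_chat's own class."""

    api_key: str
    model: str
    temperature: float
    history_dir: Path


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read settings from environment variables, falling back to the defaults."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        # The API key is required and has no default.
        raise RuntimeError("OPENAI_API_KEY must be set")
    return Settings(
        api_key=api_key,
        model=env.get("OPENAI_MODEL", DEFAULT_MODEL),
        temperature=float(env.get("OPENAI_TEMPERATURE", DEFAULT_TEMPERATURE)),
        history_dir=Path(env.get("OPENAI_HISTORY_DIR", DEFAULT_HISTORY_DIR)).expanduser(),
    )


# Example: override only the temperature; everything else uses the defaults.
settings = load_settings({"OPENAI_API_KEY": "sk-placeholder", "OPENAI_TEMPERATURE": "0.2"})
print(settings.model, settings.temperature)
```

Passing the environment in as a mapping, rather than always reading os.environ, keeps the function easy to test.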

Usage

To start a chat session, run the following command:

```shell
llm chat
```

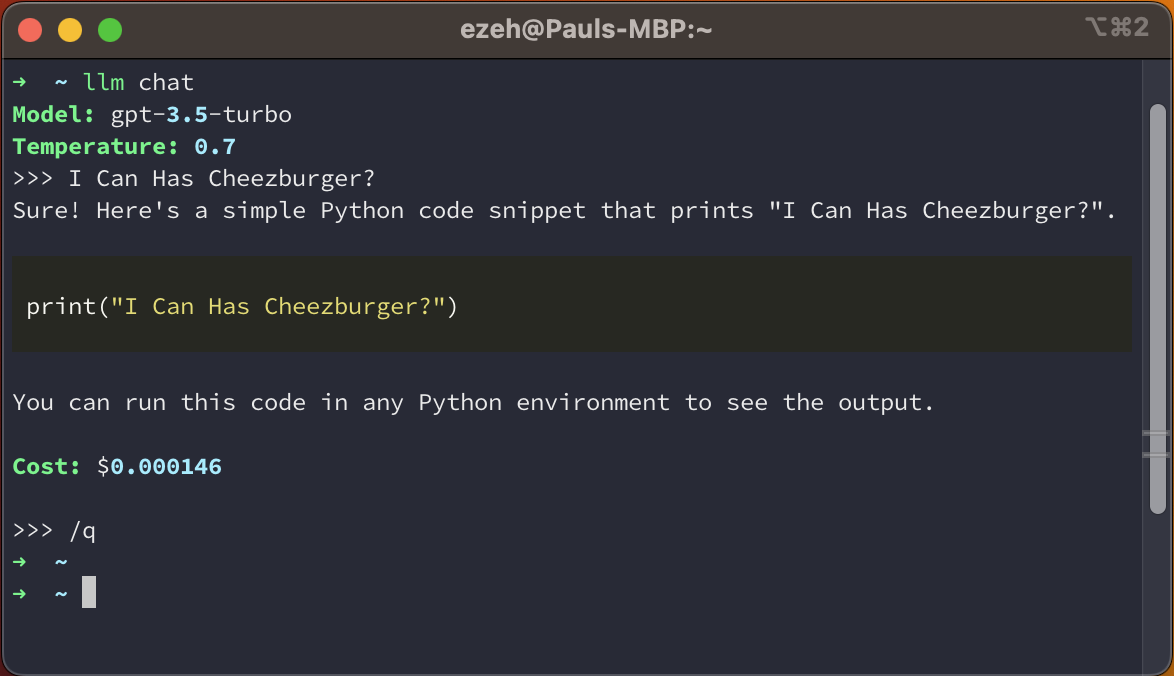

This will start a chat session with the default settings looking something like the image below:

Because the CLI accepts multi-line input, you send your input to the model by pressing Esc + Enter. After each message, the running total cost of the session is displayed. To exit the chat session, send the message /q.
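A running total cost is the sum, over every exchange, of the tokens used multiplied by the per-token price. The sketch below assumes prices quoted per 1,000 tokens with separate rates for prompt and completion tokens; CostTracker is a hypothetical name, and the prices in the example are placeholders, not OpenAI's actual rates. The token counts would come from the prompt_tokens and completion_tokens fields that OpenAI's chat completions API returns in the usage part of each response.

```python
from dataclasses import dataclass


@dataclass
class CostTracker:
    """Hypothetical running-cost tracker; prices are supplied by the caller."""

    prompt_price_per_1k: float
    completion_price_per_1k: float
    total: float = 0.0

    def add(self, prompt_tokens: int, completion_tokens: int) -> float:
        """Add the cost of one exchange and return the new running total."""
        cost = (
            prompt_tokens * self.prompt_price_per_1k
            + completion_tokens * self.completion_price_per_1k
        ) / 1000
        self.total += cost
        return self.total


# Placeholder prices, for illustration only.
tracker = CostTracker(prompt_price_per_1k=0.001, completion_price_per_1k=0.002)
for prompt_tokens, completion_tokens in [(500, 250), (1000, 500)]:
    running_total = tracker.add(prompt_tokens, completion_tokens)
    print(f"Total cost: ${running_total:.4f}")
```

Because the chat completions API is stateless, a client typically resends the conversation so far with each request, so prompt token counts (and the cost of each message) tend to grow as a session gets longer, as the second exchange in the example illustrates.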

There are several additional options available:

- --context allows you to provide one or more text files as context for your chat, which is sent as an initial system message at the start of the conversation. You can provide the argument multiple times to add multiple files.
- --name gives your chat session a name. This is used to name the history file.
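To show what "sent as an initial system message" means in terms of OpenAI's chat message format ({"role": ..., "content": ...} dictionaries), here is a minimal sketch. build_initial_messages is a hypothetical helper, and joining multiple files with blank lines into a single system message is an assumption about how llm_chat combines them.

```python
import tempfile
from pathlib import Path


def build_initial_messages(context_files: list[Path]) -> list[dict[str, str]]:
    """Hypothetical helper: combine --context files into one system message."""
    if not context_files:
        return []
    # Read each file in the order the --context arguments were given.
    contents = [path.read_text(encoding="utf-8") for path in context_files]
    # Assumption: files are separated by a blank line in a single message.
    return [{"role": "system", "content": "\n\n".join(contents)}]


# Example with two temporary context files.
with tempfile.TemporaryDirectory() as tmp:
    notes = Path(tmp, "notes.txt")
    notes.write_text("Project notes.", encoding="utf-8")
    style = Path(tmp, "style.txt")
    style.write_text("Answer briefly.", encoding="utf-8")
    messages = build_initial_messages([notes, style])

print(messages)
```

The user's messages would then be appended after this system message for the rest of the conversation.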

See llm chat --help for a full description of all options and their usage.

Finally, you can load a previous chat session using the llm load command. For example:

```shell
llm load /path/to/session.json
```

See llm load --help for more information.
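For a sense of what saving and reloading a session involves, here is a minimal round-trip sketch. The file layout (<history_dir>/<name>.json) and the JSON schema ({"name": ..., "messages": [...]}) are assumptions for illustration only, as are the save_session and load_session names; llm_chat's actual history format may differ.

```python
import json
import tempfile
from pathlib import Path


def save_session(history_dir: Path, name: str, messages: list[dict[str, str]]) -> Path:
    """Hypothetical: write a session to <history_dir>/<name>.json."""
    history_dir.mkdir(parents=True, exist_ok=True)
    path = history_dir / f"{name}.json"
    payload = {"name": name, "messages": messages}
    path.write_text(json.dumps(payload, indent=2), encoding="utf-8")
    return path


def load_session(path: Path) -> list[dict[str, str]]:
    """Hypothetical: read the messages back so a chat can resume where it left off."""
    data = json.loads(path.read_text(encoding="utf-8"))
    return data["messages"]


# Example round trip in a temporary history directory.
with tempfile.TemporaryDirectory() as tmp:
    history = [
        {"role": "user", "content": "Hello"},
        {"role": "assistant", "content": "Hi! How can I help?"},
    ]
    saved = save_session(Path(tmp), "demo", history)
    restored = load_session(saved)

print(saved.name, restored == history)
```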